The Third Revolution in Defense comes from everyday devices. With brains inside

The world has changed, and the protagonists of this change are small, agile, laser-focused startups that innovate quickly and bring products to market at a fraction of what it would cost a large enterprise. The ‘democratization of high tech innovation’ is a pervasive trend in both commercial and defense applications, and the trend has been picked up by the US Department of Defense (DoD).

That was, in essence, the message coming from a Defense Innovation Unit Experimental (DIUx) conference in Silicon Valley last January, where Neurala was one of only six highly innovative startups invited to present to Secretary of the Air Force Lee James and multiple representatives of DoD organizations. DIUx serves as the nexus between non-traditional companies operating at the bleeding edge and the DoD. One of the conference’s focal points, and surely the reason why Neurala was invited, was the “third offset” initiative and the role that agile and innovative startups play in it.

The third offset refers to the competitive advantage that the Defense Department has against upcoming adversaries. The first offset, in the 1950s, was that the US would depend upon its nuclear arsenal for deterrence against the larger Soviet army. The second offset, in the 1970s, was to use technological know-how for advanced guidance for highly accurate weapons delivery. Stealth technology and computerized command-and-control were essential parts of the second offset.

While the details of the third offset are not fully public, they appear to include the ability to decentralize control, allowing for autonomous sensing and operation, by using a mix of smart machines and humans for decision making. And here is where startups like Neurala fit: fast-moving companies, just tens of people strong, quickly innovating and deploying sophisticated Artificial Intelligence (AI) applications into off-the-shelf robots, drones, and mobile platforms.

Unlike traditional ways of doing business (large contractors, expensive projects, multiple years to get the deliverable deployed, with the risk of running over budget and early obsolescence), startups are fast at building viable proofs of concept, or even deploying products in the marketplace, by using what’s available to them. This includes AI applications, in particular when deployed on Unmanned Aerial or Ground Vehicles, or, as mortals like me call them, drones and robots.

The inescapable future of drones and robots

One of the hottest and most debated topics in discussions of the future of Unmanned Systems and their role in Defense is whether Artificial Intelligence (or ‘machine intelligence’) will replace humans on the battlefield. Humans vs. machines, in essence.

As in many other cases, the truth is in the middle. There are some things that can be done better by humans and other things that can be done better by AI. The best of both worlds is when human intelligence and AI collaborate and each compensates for the other’s weaknesses.

Modern machines can evaluate digital sensor information and make decisions faster than a human. It is difficult for a human to read and evaluate information while flying a modern jet with hundreds of sensors, or while controlling a swarm of smart drones. The volume of information displayed per unit of time would be enormous, and it would be hard to distinguish what is important from what is not. A computer can take all of the sensor information from these devices, analyze it rapidly, and present the human with ‘pre-digested’ information that discards the useless and focuses on the useful and actionable. On the other hand, humans are still better at assembling the various pieces of the (pre-digested by AI) puzzle and making important strategic decisions. At least for now…

The inescapable future… is actually now: enabling technologies to fuel the AI revolution

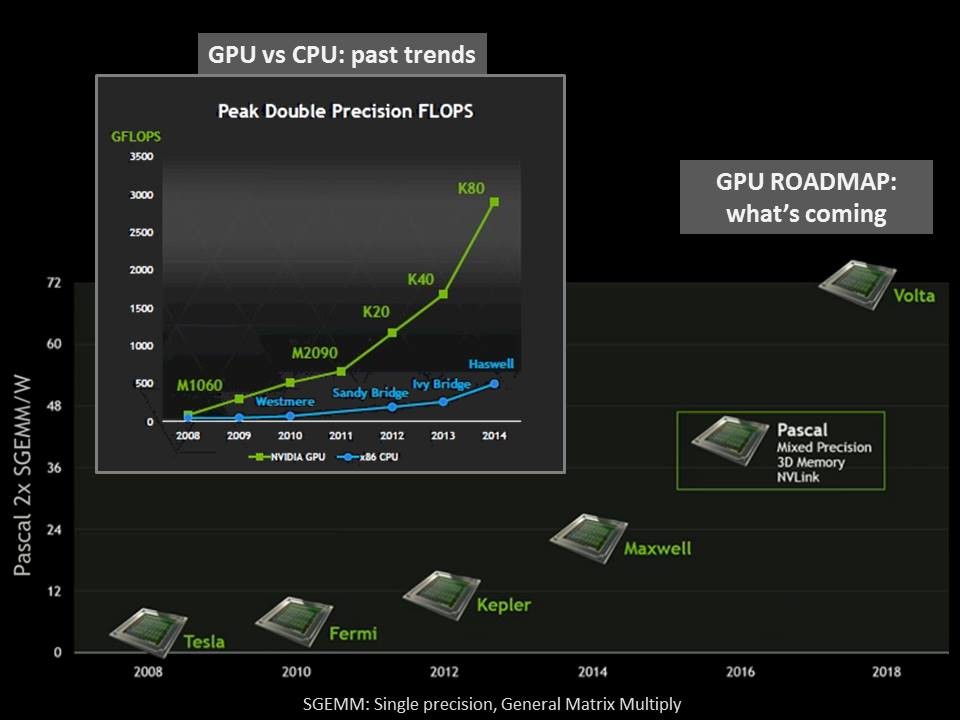

How futuristic is an AI working alongside war-fighters on the battlefield? I would argue it is so close we can almost touch it. Enabling computing technologies such as graphics processing units (GPUs), which were originally designed for computer gaming and 3D graphics, are available today to run computationally intensive AI models in real time on a mobile machine such as a drone or a ground robot. Is this because of some classified DARPA project? Not quite. It’s because of consumers’ more mundane appetite for video games and mobile graphics. Let’s look at the chart: it compares how the computing power of GPUs and CPUs has scaled over the past few years, and what the next generation of GPUs from nVidia is going to bring, as measured by single-precision general matrix multiply (SGEMM) computations.

For example, the Volta-class GPU will perform almost twice as well as the prior architecture, Pascal.

This may sound a bit too dry and out of context, but it can be translated into something simpler: “we could run an artificial brain almost twice as large on Volta as we could on Pascal”. And nVidia is only the tip of the iceberg: another fast-moving startup, Movidius, has introduced a different kind of chip, called a visual processing unit (VPU, a term also occasionally associated with GPUs), which provides another quantum leap in the battle between the two opposing “gods”: GFLOP and Watt.
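To make the arithmetic behind that translation concrete, here is a minimal Python sketch. It rests on simplifying assumptions of mine: that the network is limited by compute rather than memory, and that each weight costs one multiply and one add per processed frame. The function names and the throughput, frame-rate and power figures are hypothetical placeholders chosen for illustration, not measured numbers for Pascal, Volta or any Movidius chip.

```python
def sgemm_flops(m: int, n: int, k: int) -> int:
    """Floating-point operations for C = A @ B with A (m x k) and B (k x n).

    Each of the m * n outputs needs k multiplies and k adds.
    """
    return 2 * m * n * k


def weights_per_frame(throughput_gflops: float, frames_per_second: float) -> int:
    """Rough upper bound on network weights that fit in one frame's compute budget.

    Assumes one multiply-add (2 FLOPs) per weight per frame, and that
    compute, not memory bandwidth, is the bottleneck.
    """
    flops_per_frame = throughput_gflops * 1e9 / frames_per_second
    return int(flops_per_frame // 2)


def gflops_per_watt(throughput_gflops: float, power_watts: float) -> float:
    """Efficiency figure: the GFLOP vs. Watt trade-off in a single number."""
    return throughput_gflops / power_watts


if __name__ == "__main__":
    # Placeholder figures, NOT vendor specifications.
    older_gpu_gflops = 1200.0
    newer_gpu_gflops = 2 * older_gpu_gflops  # "almost twice" rounded up to 2x
    fps = 30.0

    older = weights_per_frame(older_gpu_gflops, fps)
    newer = weights_per_frame(newer_gpu_gflops, fps)
    print(f"weights per frame, older GPU: {older:,}")
    print(f"weights per frame, newer GPU: {newer:,}")
    print(f"size ratio: {newer / older:.2f}")
    print(f"efficiency at a hypothetical 10 W: {gflops_per_watt(older_gpu_gflops, 10.0):.1f} GFLOPS/W")
```

Under these assumptions the supportable network size scales linearly with SGEMM throughput, which is all the “twice as large a brain” claim needs. On a battery-powered drone the efficiency figure often matters more than raw throughput, since the power budget is fixed.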

In summary: the consumer world is producing state-of-the-art processors that startups like Neurala can simply pick up, design very sophisticated AI algorithms for, and deploy on consumer devices and drones at low cost and high speed.

Let me give you an example of what’s coming. Take a piece of software that emulates aspects of the brain’s visual system (including its ability to learn new items ‘on-the-fly’) and one of the cheapest CPUs available, an ARM processor running in a smartphone or tablet, and put them together. Here is what they can do.

Beware: Third Offset may be closer than it appears

Real-time object recognition running on low-cost unmanned vehicles is just one piece of the puzzle in the Third Offset strategy. However, other capabilities are being implemented within the same consumer-centric & market-friendly philosophy: low cost, fast time to market.

Not only will these capabilities be needed for the military offset, they will also be necessary for self-driving cars and autonomous civilian drones. The ability to integrate a wide variety of information sources (including information from various sensors, changing maps and traffic conditions) with the intuition of the human brain will make for powerful capabilities to come. And they had better get here quickly, and affordably.