When designing robotic platforms, the choice of sensors often determines the Go/No-Go decision for a final product. Sensors account for a large share of a robot’s total cost, and that cost is a main obstacle to the commercialization of consumer robotic platforms and applications. This holds at all levels: from inexpensive consumer robots, to drones (which also face payload constraints, among others), all the way to self-driving cars.

While inexpensive sensors are often inadequate in providing useful information about the robot’s environment, more complex sensors are very expensive.

What are the main sensor types?

For the purposes of robotics, we can distinguish between active and passive sensors.

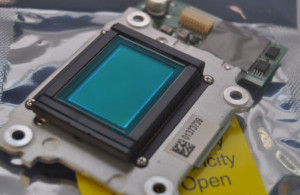

A passive sensor is an instrument or device designed to receive and measure natural emissions produced by the environment. Passive sensors, therefore, do not emit energy: they rely on energy that already exists in the environment. As an example, a common digital camera found in consumer devices uses a lens to focus light on an image pickup device, and encodes images and videos digitally (rather than chemically, as in older cameras… remember?). Biological sensors, such as eyes and ears, also gather information by passively registering environmental energy.

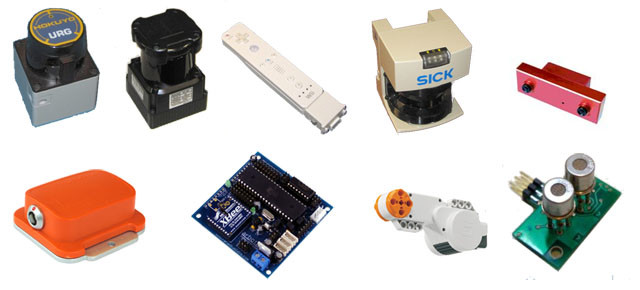

Active sensors, such as RADAR, LIDAR, and infrared rangefinders, work by emitting energy and measuring distance from the reflected (or returned) energy. The term active is well-placed: these sensors probe the environment with self-generated energy. For instance, LIDAR (Light Detection And Ranging) measures distance by illuminating a target with a laser and then analyzing the reflected light. A popular, inexpensive active sensor is the Microsoft Kinect. In biology, active sensing is rare, and is mostly limited to species living in environments where ambient energy is scarce. Examples include echolocation in bats and dolphins.
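To make the "emit, then analyze the return" idea concrete, here is a minimal sketch of the range calculation used by time-of-flight sensors: the pulse travels to the target and back, so the distance is half the round-trip time multiplied by the wave speed. The function name and the sample timings are hypothetical, chosen only for illustration, and not every active sensor works this way (some rely on projected patterns or phase shifts instead).

```python
# Illustrative sketch only: names and sample values are assumptions,
# not taken from any particular sensor's API.

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact by SI definition
SPEED_OF_SOUND_M_PER_S = 343.0          # approximate, dry air at about 20 degrees C


def range_from_round_trip(round_trip_s: float,
                          wave_speed_m_per_s: float = SPEED_OF_LIGHT_M_PER_S) -> float:
    """Return the one-way distance (m) to a target from a pulse's round-trip time (s)."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse covers the distance twice (out and back), hence the division by 2.
    return wave_speed_m_per_s * round_trip_s / 2.0


if __name__ == "__main__":
    # A laser pulse that returns after 100 nanoseconds.
    print(range_from_round_trip(100e-9))
    # An ultrasonic ping that returns after 10 milliseconds.
    print(range_from_round_trip(0.010, SPEED_OF_SOUND_M_PER_S))
```

With these sample values the laser target is about 15 m away and the ultrasonic target about 1.7 m away. The same formula also hints at why optical time-of-flight hardware is costly: resolving centimeters with light requires timing on the order of tens of picoseconds.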

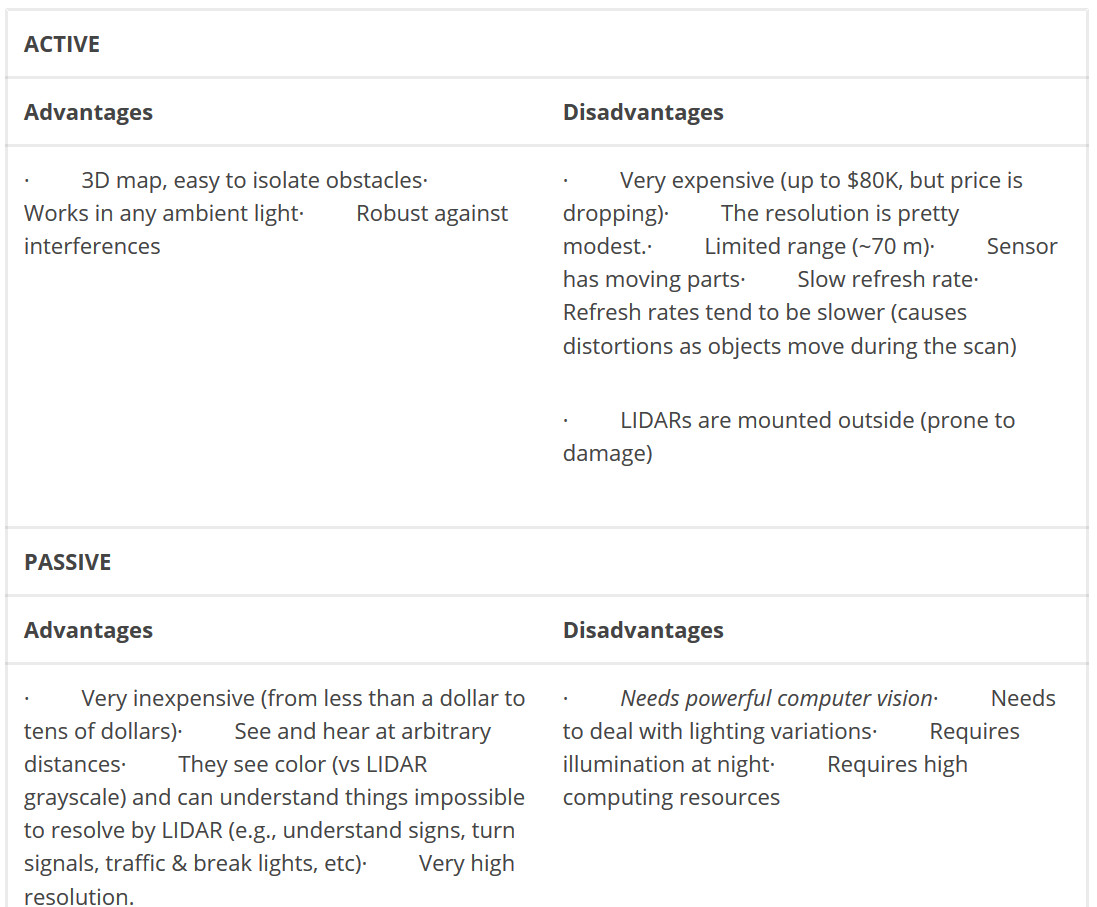

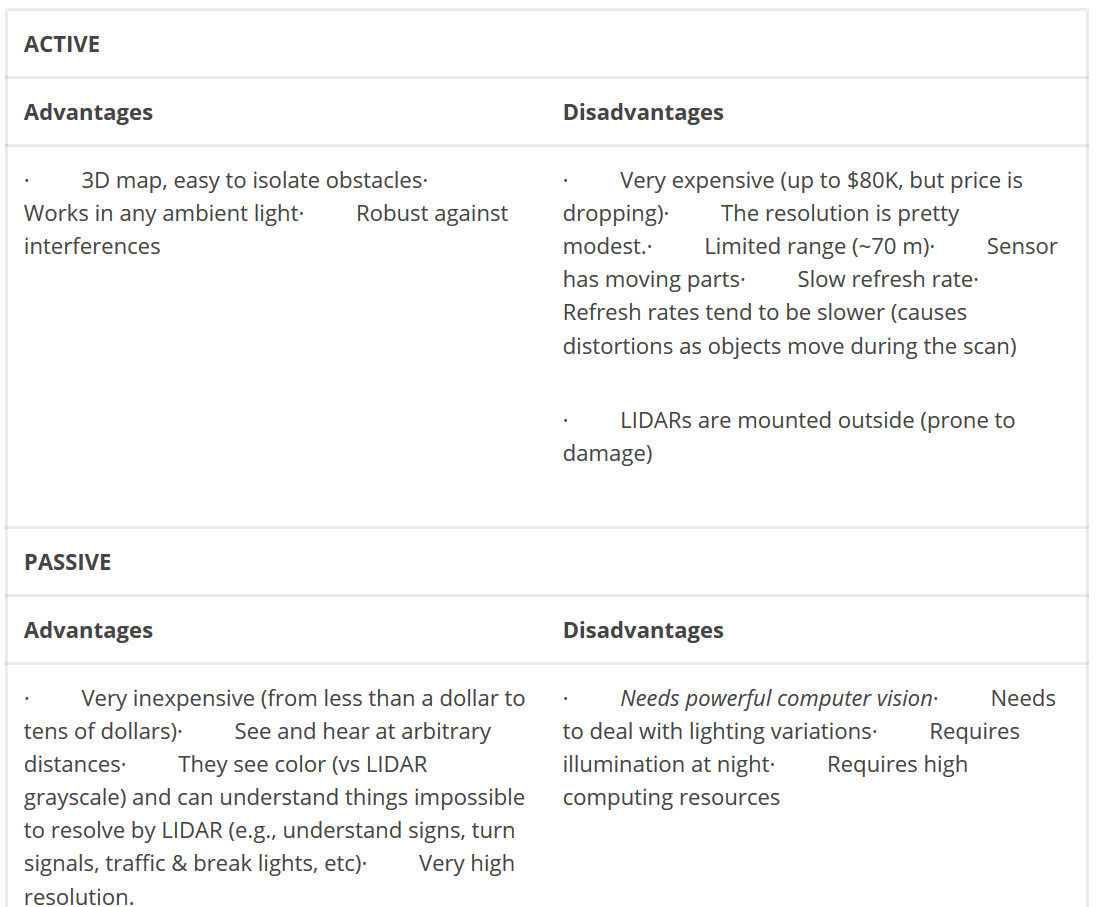

Each sensor type has trade-offs: conditions where it works best, and a different price point.

Trade-Offs Between Active and Passive Sensors

The table below offers a summary of advantages and disadvantages for each sensor type.

What about stereo?

Somewhere conceptually in between monocular passive sensors and active sensors lies passive stereo (two-camera) vision. Much of the human brain, in terms of cortical space, is devoted to vision processing, and a major feature of the latter, common to many predator species, pertains to depth perception using two eyes. Predators, unlike prey, tend to have both eyes “pointing forward”: this allows them to estimate distance purely optically via binocular depth perception, or stereo vision. Stereo vision recovers the distances of objects and features from the geometric relations among multiple distinct viewpoints. This is done by creating a disparity map of the scene: an image whose pixel intensities encode the shift of each feature between the two views, which is inversely related to depth (depth can then be calculated using simple 3-D trigonometry or the parallax formula). Disparity maps are particularly useful for robotics (from small robots all the way to automotive and autonomous fixed-wing drones) because they encapsulate information essential to localization, segmentation, collision avoidance, and navigation. Stereo is an area rich in research: it has been possible for many years to create full stereo maps, with limitations in resolving long distances (how far depends on the distance between the two cameras). While stereo is more processing-intensive, its benefit may offset other processing required to extract information from monocular images.
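As a rough illustration of the parallax formula, the sketch below assumes an idealized, rectified pinhole stereo pair, where depth is Z = f · B / d (f is the focal length in pixels, B the distance between the cameras, d the disparity in pixels). The camera parameters are hypothetical values picked for the example, and the error estimate is a first-order approximation rather than a full error model.

```python
# Illustrative sketch only: assumes a rectified pinhole stereo pair.
# All parameter values below are hypothetical.


def depth_from_disparity(disparity_px: float,
                         focal_length_px: float,
                         baseline_m: float) -> float:
    """Depth Z (m) of a point from its disparity d (px): Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means infinitely far)")
    return focal_length_px * baseline_m / disparity_px


def depth_uncertainty(depth_m: float,
                      focal_length_px: float,
                      baseline_m: float,
                      disparity_error_px: float = 1.0) -> float:
    """First-order depth error (m) for a given disparity error: Z^2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_length_px * baseline_m) * disparity_error_px


if __name__ == "__main__":
    focal_px, baseline = 700.0, 0.12  # hypothetical camera: 700 px focal length, 12 cm baseline
    for disparity in (42.0, 4.2):
        z = depth_from_disparity(disparity, focal_px, baseline)
        err = depth_uncertainty(z, focal_px, baseline)
        print(f"disparity={disparity} px, depth={z:.2f} m, error per px={err:.2f} m")
```

With these assumed parameters, a one-pixel disparity error costs roughly 5 cm at 2 m but several meters at 20 m, because the error grows with the square of the depth. Widening the baseline reduces it proportionally, which is the long-distance limitation mentioned above.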

What to do? Active vs. Passive, the Software way

Cost is a main factor in robotics. This is true not only for consumer devices and low-cost robots. All platforms, from toys to cars and autonomous bulldozers, face the same issue: cost must remain low, and computing resources are scarce.

The best solution we envision today is to leverage passive sensors and take inspiration from biology, where passive sensors alone provide enough information (at least for biological systems) to carry out complex tasks such as object recognition, obstacle avoidance, and navigation. Low-cost sensing, when complemented with innovative software, will provide the technology substrate needed to take robotics to the next stage.